
Showing results for tags 'Large Files'.


```autoit
#include <File.au3>
#include <Array.au3>

Local $nobrainArray
; _FileReadToArray() puts the file's lines into $nobrainArray and returns 1 on success, 0 on failure
$var = _FileReadToArray("example.txt", $nobrainArray)
; $var holds that 1/0 flag, so this splits "1" rather than the file text
$split = StringSplit($var, ":") ; split by colon?
_ArrayDisplay($split)
```

It's getting late and I'm getting more and more tired, so I think I should go to bed and give this another look tomorrow. But if someone could help me, I'd be grateful! The file looks like this:

```text
randomfirstname:randomlastname\nrandomfirstname:randomlastname\nrandomfirstname:randomlastname\nrandomfirstname:randomlastname\nrandomfirstname:randomlastname\nrandomfirstname:randomlastname\nrandomfirstname:randomlastname\nrandomfirstname:randomlastname\nrandomfirstname:randomlastname\n----------------------------------------------------------------------\n\nThe topic can be found here:\nhttps://www.websitehere.com\n\n\nYou can unsubscribe at any time here: https://www.websitehere.com/unsubscribe/Zm9ydW1zO2ZvcnVtczs0MzszOTc0MTA7Mzk3NDEwO25pa29sYXppbmRvQGdtYWlsLmNvbQ,,/\n\nIf you are not following any forums and wish to stop receiving notifications, uncheck the setting\n\"Send me news and information\" found in \'My Settings\' under \'Notification Options\'.\n',545627,'followed_forums','https://www.websitehere.com/topic/','forums','forums',43,'4745c9f0607baec3e8bc38f47d07f9bd'),(622776,49813,1457299052,1,'<a href=\'https://www.websitehere.com/!545627\'>Antepliemmo</a> posted topic <a href=\'https://www.websitehere.com\'>\n\n----------------------------------------------------------------------\n
```

As you can see, this is very messy! There are random first names and last names everywhere, and then there is a lot of junk... I am extracting all the first/last names for a buddy, but I just can't seem to figure it out. Any help is appreciated; I'll keep working on this again tomorrow with a fresh mindset!

Regards,
Ryuk
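One way the extraction above could be approached, sketched under assumptions rather than offered as a definitive answer: the `\n` sequences in the dump are the two literal characters backslash and `n` (as in the sample), and every name consists only of word characters (letters, digits, underscore). The helper name `_ExtractNamePairs` is hypothetical. It turns the literal `\n` into real line feeds, then uses `StringRegExp` with `$STR_REGEXPARRAYGLOBALMATCH` to capture every `word:word` pair. Text such as `https://...` or `here:` is skipped because the character after the colon is not a word character.

```autoit
#include <StringConstants.au3>

; Hypothetical helper: returns a 2D array [n][2] of first/last name pairs,
; or sets @error = 1 and returns 0 when no pair is found.
; Assumes names contain only word characters (\w).
Func _ExtractNamePairs($sText)
    ; AutoIt strings have no escape sequences, so "\n" below is a literal backslash + n,
    ; exactly what the dump contains; replace it with a real line feed
    $sText = StringReplace($sText, "\n", @LF)

    ; Global match with two groups returns a flat array: [first1, last1, first2, last2, ...]
    Local $aPairs = StringRegExp($sText, "(\w+):(\w+)", $STR_REGEXPARRAYGLOBALMATCH)
    If @error Then Return SetError(1, 0, 0)

    ; Fold the flat list into rows of [first, last]
    Local $iCount = Int(UBound($aPairs) / 2)
    Local $aNames[$iCount][2]
    For $i = 0 To $iCount - 1
        $aNames[$i][0] = $aPairs[2 * $i]
        $aNames[$i][1] = $aPairs[2 * $i + 1]
    Next
    Return $aNames
EndFunc
```

Usage would be along the lines of `Local $aNames = _ExtractNamePairs(FileRead("example.txt"))` followed by `_ArrayDisplay($aNames)` (with `Array.au3` included). Reading the whole file with `FileRead` avoids the problem in the original script, where `$var` only held the 1/0 return value of `_FileReadToArray`. Names with hyphens, apostrophes or accents would need a wider character class than `\w`.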

---

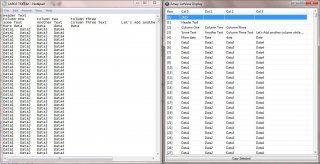

This script is fairly straightforward. If you have ever worked with large files before, this may be of help. By large I mean files of 2 MB or so. Granted, this doesn't sound so big, but going through the file and parsing it into a 2D array all at once took an astronomical amount of time, so I wrote my own function to handle it. I discovered that chunking a large array can boost the performance of iterating through its elements, and in theory this should keep performance the same no matter how large the array is. I know there is room for improvement, so please feel free to contribute!

Note: I wasn't able to fully test this on larger files (around 200 MB) because AutoIt reports an error allocating memory while executing _FileReadToArray(). Any help is appreciated.

Features:

- Chunking (performance does not degrade as the input grows, i.e. it can parse 200 lines or 20,000 without a per-line slowdown)
- Automatically resizes to a dynamic number of columns
- Preserves columns while parsing
- FAST!!!!!! (I can parse a file that contains 24,000 lines with a variable number of columns, up to 8, and it finishes in under a second.)

Script: _ArrayTo2DArray.au3

Example usage:

```autoit
#include <File.au3>               ; provides _FileReadToArray()
#include "_ArrayTo2DArray.au3"    ; the attached script, saved next to this one

Local $aExport ; Initialize array
_FileReadToArray("LARGE TEXT.txt", $aExport) ; Returns 1D array of file
Local $aSheet = _ArrayTo2DArray($aExport) ; Converts it to 2D
```

Example text file: LARGE TEXT.txt

This script was inspired by this post.

*Updated attachment: Minor bug fixes*

*UPDATE June 6, 2013: I apologize! I just realized I made a complete mess of the algorithm. I'm working on a fix now.*

*UPDATE June 6, 2013: Bug fixed! It's attached in the post now.*
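The attached `_ArrayTo2DArray.au3` is not shown here, so the following is only a sketch of the same ideas under stated assumptions, not the author's implementation. The hypothetical `_FileTo2DArray` reads the file one line at a time with `FileReadLine` instead of building a 1D array with `_FileReadToArray` first, grows the row count in fixed-size chunks (`$iChunk`) rather than with one `ReDim` per line, and widens the column count whenever a longer line turns up. The comma default for `$sDelim` is an assumption, since the post does not say how LARGE TEXT.txt is delimited. The finished 2D array still has to fit in memory, so this may not be enough on its own for a 200 MB file.

```autoit
#include <FileConstants.au3>
#include <StringConstants.au3>

; Hypothetical helper (not the attached _ArrayTo2DArray): streams a delimited text
; file into a 2D array. Returns 0 with @error = 1 if the file can't be opened,
; or @error = 2 if it contains no non-blank lines.
Func _FileTo2DArray($sPath, $sDelim = ",", $iChunk = 1000)
    Local $hFile = FileOpen($sPath, $FO_READ)
    If $hFile = -1 Then Return SetError(1, 0, 0)

    Local $aOut[$iChunk][1]
    Local $iRows = 0, $iCols = 1, $sLine, $aFields

    While 1
        $sLine = FileReadLine($hFile)
        If @error Then ExitLoop ; -1 = end of file, 1 = read error
        If $sLine = "" Then ContinueLoop ; skip blank lines

        ; $STR_NOCOUNT: return only the fields, without the count in element 0
        ; (with this flag each character of $sDelim acts as a delimiter)
        $aFields = StringSplit($sLine, $sDelim, $STR_NOCOUNT)

        ; Out of rows: grow by a whole chunk instead of one row at a time
        If $iRows = UBound($aOut, 1) Then ReDim $aOut[$iRows + $iChunk][$iCols]

        ; Longer line than any seen so far: widen every row (ReDim keeps existing values)
        If UBound($aFields) > $iCols Then
            $iCols = UBound($aFields)
            ReDim $aOut[UBound($aOut, 1)][$iCols]
        EndIf

        For $j = 0 To UBound($aFields) - 1
            $aOut[$iRows][$j] = $aFields[$j]
        Next
        $iRows += 1
    WEnd
    FileClose($hFile)

    If $iRows = 0 Then Return SetError(2, 0, 0)
    ReDim $aOut[$iRows][$iCols] ; trim the unused rows of the last chunk
    Return $aOut
EndFunc
```

A call would look like `Local $aSheet = _FileTo2DArray("LARGE TEXT.txt", ",")`. Shorter lines leave their trailing cells as empty strings. A larger `$iChunk` means fewer `ReDim` copies at the cost of a bigger temporary array; doubling the row count on each growth would be an alternative worth timing against the fixed chunk.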

2 replies

Tagged with: 2D Array, Large Files (and 1 more)